Introduction

Suppose your customer wants to calculate the weight of the components that make up your product (the Bill of Materials, or BOM). This blog post describes how to summarize and calculate the quantities and weights of the products sold and of their parts. For this blog post I've used the explanation from Exceleratorbi.com.

The Data

So, I've created a small example of the problem. The sample workbook contains three tables: a Material (Product) table, a BOM table and a Sales fact table. To make the example more interesting I've created some data for products like a car, a bicycle and others, together with the parts that make them up, like wheels, a frame and a steering wheel. Lastly, a fact table with the sales data is created, in which a quantity tells how many were sold on a day.

So, I'm interested in knowing the weight of the materials and also the weight of the parts of each Material (Product).

The data model

In the diagram I've plotted the datamodel. The keys of the DimMaterial table are propagated in different directions and not in one direction we 'normally' use in dimensional modelling.The DAX expressions

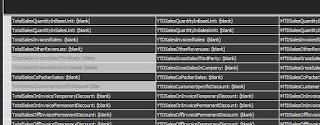

For the initial implementation of the problem in the workbook the following DAX expressions are used. Pay attention to the TotalMaterialWeight expression. It uses the SUMX function, which calculates the weight row by row. SUMX is an iterator and is less efficient than the SUM function. Normally I don't use SUMX very often, but in this case it is needed because you have to multiply the values per row first and then sum the results.

TotalQuantity:=SUM(FactSales[Quantity])

TotalBOMWeight:=SUM(DimBom[BOMWeight])

TotalMaterialWeight:=SUMX(DimMaterial;[TotalQuantity]*[TotalBOMWeight])

The first DAX expression calculates the quantity sold in FactSales, the TotalBOMWeight expression calculates the weight of the individual BOM parts, and TotalMaterialWeight calculates the sum, over the rows of DimMaterial, of TotalQuantity multiplied by TotalBOMWeight.
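
To see why the iterator matters, it can help to compare it with a naive measure that multiplies the two totals directly. The sketch below is only an illustration: NaiveMaterialWeight is a hypothetical name that is not part of the sample workbook, and the numbers in the note after it are made up.

```dax
NaiveMaterialWeight:=
    // Hypothetical comparison measure, not in the sample workbook.
    // It multiplies the two totals once for the current filter context,
    // instead of multiplying per DimMaterial row and summing the results.
    [TotalQuantity]*[TotalBOMWeight]
```

With, say, 2 units sold of a material weighing 10 and 3 units of a material weighing 20, the row-by-row SUMX version gives 2*10 + 3*20 = 80 on the total, whereas the naive version gives (2+3)*(10+20) = 150. That difference on the total row is the reason the multiplication has to happen before the sum.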

The outcome

Below is a first impression of the PivotTable based on the PowerPivot model. Here you can see that TotalQuantity and TotalMaterialWeight are calculated correctly. Next to the PivotTable I've included a manual calculation, and it matches the automated calculation.

Below is a screenshot of the weights of the BOM parts, and that calculation is also correct.

How to improve the model

Now there is something wrong with the TotalBOMWeight. It is 1225 on every row and that doesn't seem right. The problem is that the relationship to DimBom does not filter in the direction we need here. In Power BI Desktop you can set the relationship to be bidirectional to make it work. To change this behavior in PowerPivot, you have to add some black magic to the DAX expressions.

TotalSalesQuantity:=CALCULATE(SUM(FactSales[Quantity]);DimBom)

TotalBOMWeight:=CALCULATE(SUM(DimBom[BOMWeight]);FactSales)

TotalMaterialWeight:=SUMX(DimMaterial;[TotalSalesQuantity]*[TotalBOMWeight])

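The sums in TotalSalesQuantity and TotalBOMWeight are now wrapped in a CALCULATE function, each with the other table as a filter argument: the sales quantity is filtered by DimBom and the BOM weight by FactSales. This handles the calculation of TotalBOMWeight better.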

For a full explanation of this solution, see the blog post on Exceleratorbi.com.

Conclusion

This blog post is about calculating measures that do not follow a standard pattern. The method described calculates the total weight of the Materials (products) sold and of their BOM parts (components). We also explored the problem of bidirectional relationships.

Greetz,

Hennie