DTS.Executable ExeForSQLTask = pkg.Executables.Add("STOCK:SQLTask");

DTS.TaskHost TKHSQLHost = (DTS.TaskHost) ExeForSQLTask;

TKHSQLHost.Name = "This is a programmed SQL Task";

ExecuteSQLTask MySQLTask = (ExecuteSQLTask)TKHSQLHost.InnerObject;

The last step is optional (i call this design time validation) . This uses the ExecuteSQLStep object for adopting the properties and methods of the Execute SQL task. It’s also possible to do something like this:

taskHost.Properties["SqlStatementSource"].SetValue(taskHost, "SELECT * FROM sysobjects");

This is what i would define as "runtime validation" of the properties. So the runtime validation method was my plan B but i didn’t want to give up that soon. I finally managed to get some answers.

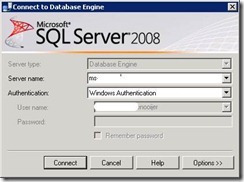

First, Someone at the MSDN SSIS forum came up with the idea that i had to add a reference to the right dll for the ExecuteSQLTask. So i did.

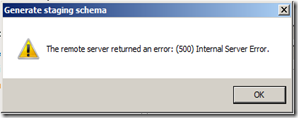

Also i had to add the following using command. "using Microsoft.SqlServer.Dts.Tasks.ExecuteSQLTask;" Still i wasn't finished.After this correction the following error occured:

Unable to cast COM object of type 'System.__ComObject' to class type 'Microsoft.SqlServer.Dts.Tasks.ExecuteSQLTask.ExecuteSQLTask'. Instances of types that represent COM components cannot be cast to types that do not represent COM components; however they can be cast to interfaces as long as the underlying COM component supports QueryInterface calls for the IID of the interface.

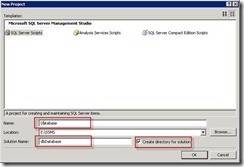

Finally James Beresford on MSDN forums came up with the answer i was looking for. I was using the ‘wrong’ .NET framework (4 instead of 3.5)! So i changed the .NET framework to 3.5 and it worked great! There were some warnings about System.Csharp reference. I removed it (fingers crossed) and the warnings disappeared.

Hennie

![Solution_explorer[1] Solution_explorer[1]](http://lh3.ggpht.com/_EXwlynuU_Cw/TQzNo_o3WFI/AAAAAAAAAd4/BnrwNCADOPk/Solution_explorer1_thumb.png?imgmax=800)

![clip_image005[1] clip_image005[1]](http://lh3.ggpht.com/_EXwlynuU_Cw/TQzNsepBfqI/AAAAAAAAAeg/8t4yHmBOTuw/clip_image0051_thumb.gif?imgmax=800)

![clip_image011[1] clip_image011[1]](http://lh3.ggpht.com/_EXwlynuU_Cw/TQzNvG_CERI/AAAAAAAAAe4/1jFxXTeGZmE/clip_image0111_thumb.jpg?imgmax=800)