Introduction

Normally I would log into Snowflake with Signle Sign On or with Username and Password, but I wanted to know more about Key Pair Authentication, especially in relation with Snowsql. I have a couple of scripts that I use for installing into Snowflake. And with Snowflake you can do this with the command line tool Snowsql.

Key pair authentication

As the help pages indicate, Snowflake supports key pair authentication for enhanced authentication security as an alternative to basic authentication (i.e. username and password). The authentication method uses a 20248 RSA key pair. It seems that it is possible to generate Private key with OpenSSL. Let's try this out.

And the Client tools like Snowsql (but I also saw it in Visual Studio Code) can use this Key Pair Authentication method to connect. In this blogpost I will investigate the Key Pair authentication with SnowSQL and with Visual Studio Code.

Steps

There are six steps to take in order to use the Key Pair Authentication successfully :

- Step 1: Generate the Private Key

- Step 2: Generate a Public Key

- Step 3: Store the Private and Public Keys Securely

- Step 4: Assign the Public Key to a Snowflake User

- Step 5: Verify the User’s Public Key Fingerprint

- Step 6: Configure the Snowflake Client to Use Key Pair Authentication

Step 1: Generate the Private Key

The first step is to generate the Private key with SSL. Now, if you try to enter the OPENSSL command in Windows, it will not work :

You can can visit the site Openssl.com in order to download the openssl.exe for Windows. But what I found out is that you can use the Git installation for Windows. The OpenSSL.exe is also available at the location : C:\Program Files\Git\usr\bin\

I added the path to the path variable in the Environmentvariables section. Do this : Right click on your computer (This PC) in the windows explorer and choose properties, then choose Advanced Systems Settings, then Environment variables and I added the Path to the System variable Path

And now I can execute the openssl command (according to the Snowflake help documentation):

openssl genrsa 2048 | openssl pkcs8 -topk8 -v2 des3 -inform PEM -out rsa_key.p8

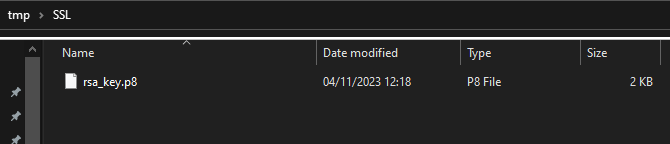

At first glance, it seems that nothing happened but there is a small file created on the disk :

And in this file the encryption key is stored (I show here a small snippet of the key) :

Public keys and private keys are the working parts of Public-key cryptography. Together, they encrypt and decrypt data. The public key is public and can be shared widely while the private key should be known only to the owner. So be careful with the private key.

This was the first step of key pair authentication and it was about generating the private key. Now let's have a look at generating public keys.

Step 2: Generate a Public Key

Now as Snowflake help documentation is stating : From the command line, generate the public key by referencing the private key. The following command assumes the private key is encrypted and contained in the file named rsa_key.p8.

openssl rsa -in rsa_key.p8 -pubout -out rsa_key.pub

You need to enter the pass phrase again in order to generate the public key. When this succeeds a new file is generated on the disk. In this case rsa.pub.

And in this file the public key is stored :

Step 3: Store the Private and Public Keys Securely

Off course, you need to store the Private key and the Public key (and I would store the pass phrase also) in a secure place. The files (especially the private key) should be protected from unauthorized access.

Step 4: Assign the Public Key to a Snowflake User

The next step is in snowflake. Let's create a user and assign the public key to this user.

USE ROLE SECURITYADMIN;

// create user with password authentication

CREATE USER INSTALL_USER_PRD

RSA_PUBLIC_KEY = 'MIdfsasdfsdfadsRT6xJnEB8+p

zzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzz

tttttttttttttttttttttttttttttttttttttttttttttttttttttttttttttttt

wwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwwww

aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa

qqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqqq

ssssssss'

DEFAULT_ROLE = SYSADMIN;

// grant usage on the default role

GRANT ROLE SYSADMIN TO USER INSTALL_USER_PRD;

Step 5: Verify the User’s Public Key Fingerprint

With the following command you can check the Public key of a user

DESC USER INSTALL_USER_PRD;

Resulting in :

Step 6: Configure the Snowflake Client to Use Key Pair Authentication

The last step is to configure the clients for using the Key Pair authentication. We have two tools : SnowSQL and Visual Studio Code where I want to try out the Key Pair Authentication.

Experiments with SnowSQL

On the Snowflake documentation there is a

help page that helps you connecting with Snowflake with Snowsql. Her is an example of a connection with Key pair authentication. I moved the accountname and databasename to the config file.

snowsql -f D:\Git\DeployTest\CREATETEST2.sql

--private-key-path D:\tmp\SSL\rsa_key.p8

--config D:\Git\DeployTest\configEnter the pass phrase :

And it will start executing the script as follows :

But when I try to execute multiple scripts it will prompt again (and again)for the pass phrase :

The documentation about the topic of Snowflake is a bit confusing. Either you choose to use the configuration file or the Command line, both uses the SNOWSQL_PRIVATE_KEY_PARAPHRASE environment variable to store the Private Key Pass Phrase.

For this mock up, I used the system environment variables for storing Private Key Pass Phrase password.

And now, all the files of Snowsql are executed without asking for the pass phrase.

Visual Studio Code

In visual Studio there are 3 ways of connecting : SSO, USername and Password and Key Pair authenication. Oh, and you need the Snowflake Driver for Visual Studio Code. Enter the accountname, Key pair and the private key path of the rsa file.

And now you are able to execute scripts in Visual Studio Code.

Final Thoughts

Allthough happy that it works I'm not completely happy that the "Private Key Pass Phrase" is stored in the Environment variable. There are a couple of problems with that:

- In company controlled systems you can't adjust the environment variables because that is part of the windows system.

- Is it save to store passwords in Environment variables or in files in plain text? This is something that I saw in the config file :

"We need to pay attention that a password is stored in a file" : I my opinion there should never be hardcoded passwords stored in plain text on systems (not in files and not environment variables). To me it feels very unsecure. Even when you secure the file with windows security.

My 2 cents,

Hennie