Introduction

This is the second blogpost in a series about Datavault. The first blogpost in this series was about the basics of Datavault and in this blogpost I would like to discuss the adaptability of the datavault model. Why is Datavault an adaptive method for designing your datawarehouse? I think there are three reasons why Datavault is an adaptive Datamodel approach:

- A fundamental reason: Separating the keys, relationships and the descriptive data in separated tables enhances the flexibility and agility of the model.

- You can easily add Satellites (within a Concept) to the model without breaking the existing model.

- You can easily add Links (a new Concept or a new Concept Constellation) to the model without altering the existing model.

In this blogpost I'll discuss the adaptive characteristics of the datavault model in more depth..

Adding Satellites

A satellite doesn't contain businesskeys. It stores (all) information about the businesskey (hub) and historizes all the information about the concept. It inherits the surrogate key of the hub (or link). In order to historize, the primary key is extended with a Date/Time Stamp. In this way the Satellite acts as a Type II dimension (SCD2). The Satellite stores every change that happens in the source.

Now let's see what happens when structural changes happens in a operational system that acts as a source for a datavault data warehouse.

1) Initial situation.

In this initial situation there is a hub and one satellite. This situation occurs most often during the start of a data warehouse in a production state. The hubs and satellites are designed and ready for usage.

2) Extra attributes added to a table in the sourcesystem.

Now, something happens in the operational system. Some tables are extended with extra fields. These extra fields should be propagated to the data warehouse. Now, the strength of the data vault model shows up. Nothing changes to the exisiting model. The extra fields should not be integrated in the existing satellite but create a new satellite and add this to the hub. Integrating the fields in the existing satellite causes difficulties like dropping and recreating the table.

On Linkedin I had a discussion about changing source systems..I'll blog about this in the future.

Adding Links

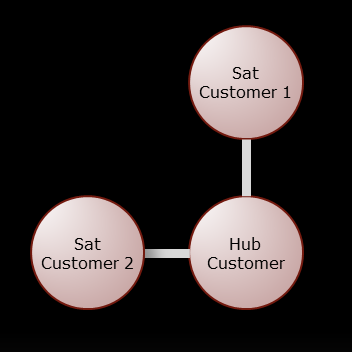

The same is true for links. The hubs are the anchors of the model. These anchors provide steadyness of the model and anchors provide satellites and links with surrogate keys. Below and example of a link table and a hub table. The hub provides the link with a surrogate key and this principle helps to connect other concepts and other concept constellations (see part I).

Suppose you have the following situation. There is an old piece of data warehouse and a new piece (concepts) of data warehouse is build.

Now another advantage of the Datavault comes into play. The new concept is linked by the link tables with the old piece of the data warehouse. And, nothing changes in the current datawarehouse. Starmodels, reports and analysis build on top the Datavault model continues to function properly.

Conclusion

The Datavault model is adaptive because of the separation of the businesskeys, the relations and the descriptive information. If you condense the data like the star model, the model will become less adaptive. It will be harder to change the model. By separating the different kinds of data, the model become more resilient to changes. By adding satellites and links to the model and not changing current satellites and links (and hubs) the current reports and (user) queries will remain running. This way the datavault model lends itself for extending the model without breaking the current model.

The star schema is less flexible for storing datawarehouse information because we have only two flavours: Facts and dimensions. Therefore Datavault is more suitable for storing datawarehouse information than star schemas.

Greetz,

Hennie