Introduction

Hi everyone, in this blogpsot I'll experiment with Data Factory in Microsoft Fabric. I'll create a Data lake in the Data Lakehouse experience and I'll load some data from an Azure SQL Database, which I've prepared for this blogpost with a demo database AdventureWorksLT and w'll load the data in the Data lake.

Let's go and see how it works.

Setting up a Demo database in Azure SQL Database

Firstly, I've created a SQL database in Azure for this blogpost. Go to Microsoft Azure and search for Azure SQL database and click on Create.

These are the standard settings for a SQL Database and I choose nothing to change here.

For the simplicity for the demo I choose SQL authentication for authorization.

I take the smallest database setting in order to save expenses.

Click through the tabs until you see the the

Additional Settings. Here I select the AdventureWorksLT Database.

And we are finished in Microsoft Azure.

Step 1 : Create the Data Lakehouse

The next step is to create a Lake house in Microsoft Fabric. Click on Lake House (Preview).

Enter a name for the Lake house and press Create.

There are "Files" and there are "Tables" folders in Lake House. Now the tables are managed by Fabric and the files are unmanaged. In the files section we are going to build a Data lake.

Create a Subfolder for a location to put the files in the Folderstructure.

Create the Pipeline in data factory

Choose Data factory in the Fabric. Renember to select the right Workspace in which you created a (free trail) capacity. Another workspace will instruct you to create a new Fabric capacity.

And then click on the Data Pipeline. This will open the Data factory.

Next step is enter a name for the Pipeline. I'm already experimenting with some naming convention. Later, I will come up with some proposals for naming conventions.

Choose "Add Pipeline activity"

When you choose to add a pipeline activity, a context menu opens and you can choose the "

Lookup" activity.

And now the activity is created on the canvas of Data Factory.

And here we select "

Azure SQL Database"

And click on "Continue" when you have selected the "Azure SQL Database" option

And a new window opens where the credentials and connection strings must be entered in order to connect to the SQL Database.

Now let's get some information from Azure SQL Database settings in order to connect the data pipeline with Azure SQL Database.

An error happens because Fabric is not allow to connect to the SQL Database Server.

I add a firewall rule in Azure :

Above is not correct, I haven't figured out what the best ranges are for the Firewall settings. I'll look into this later.

Next, an error occurred when I try to connect and it keeps appearing.

I decided to recreate the connection and then it worked fine. I think that is one of the things of a public preview version. You have to accept it and try to workaround it.

Now it succeeds with the metadata Lookup. It will load the metadata information of the tables in Pipelines. Enter the Connection to Azure SQL Database, the Connection Type and change the option "Use Query" to Query, enter the meta query to query the Information_Schema and don't forget to uncheck First Row Only

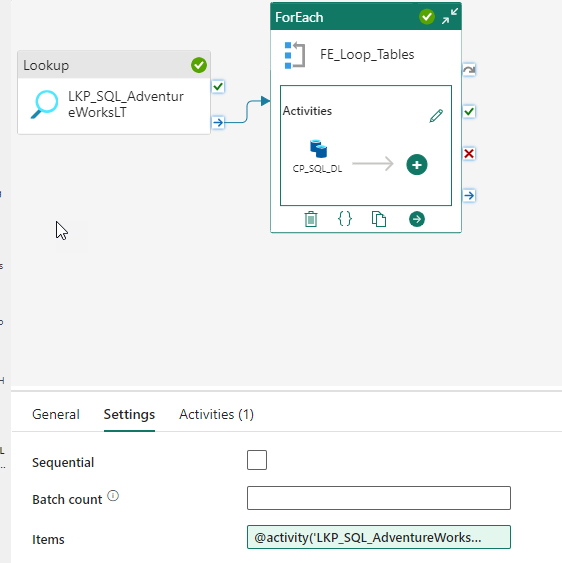

Next step is to add a

For Each activity in the ADF, give it a proper name and click on the plus in order to add a

Copy Data activity in the For Each activity.

Then, select the Copy Data Activity.

Don't forget the settings on the ForEach Loop!

Enter the expression @activity('LKP_SQL_AdventureWorksLT').output.value in the Items box

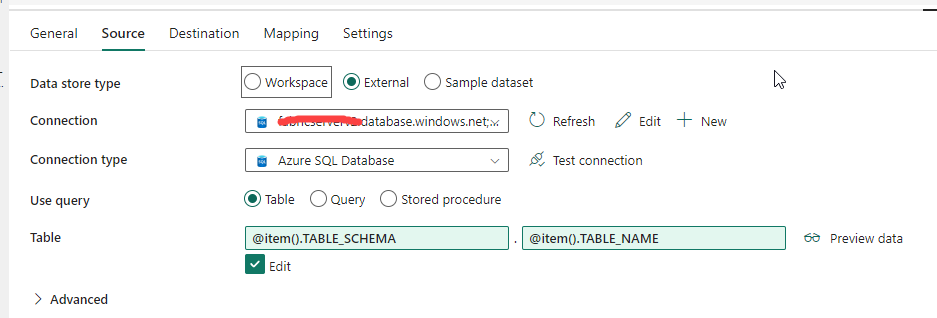

Now in the Copy Data Activity, go to Source tab and set the right settings in the entry boxes.

And change the destination tab in the Copy Activity as is shown in the following screenshot :

Change the File path to the follwoing expression:

@concat('LandingZoneOneLake/', item().TABLE_SCHEMA, '/', item().TABLE_NAME, '/')

And run the Data Factory pipeline and check if it worked. I made some errors with the expresion by referencing the wrong fields in the metadata query, but it was fixed fairly quickly.

And in the lake house the following folders and files are created:

Final Thoughts

Overall it was easy to build this solution in Microsoft Azure Fabric. There are still some bugs and you need some workarounds in the Microsft Fabric to make it work. But, I'm quite positive about the way Microsoft Fabric works.

For more inspriation I would to point you on this

YouTube video by Stijn. I used this for inspiration for this blogpost.

Hennie

Geen opmerkingen:

Een reactie posten