Introduction

The Bronze layer is something that we have heard from the Data Lakehouse buzzwords and it fits in the medallion architecture of DataBricks. The Bronze layer is the first layer in the medallion architecture and the other layers are Silver and Gold layer.

From the DataBricks site :

"The Bronze layer is where we land all the data from external source systems. The table structures in this layer correspond to the source system table structures "as-is," along with any additional metadata columns that capture the load date/time, process ID, etc. The focus in this layer is quick Change Data Capture and the ability to provide an historical archive of source (cold storage), data lineage, auditability, reprocessing if needed without rereading the data from the source system."

Ok, it seems to me that this is comparable with the Landingzone or Staging area that we know from the data warehousing. The main difference here is that that in Data warehousing you have define the tables with there respective columns and data type (Schema-on-Write) and in the Data Lakehouse the data is stored in so called files.

I'm not saying that this is THE new data architecture you have to build, it's in concrete and you have to do it this way. For me it's a learning curve and I'm trying to understand the medallion architecture and see how it compares with a Data Warehouse solution. In my opinion a Data Warehouse is a solution and Data Lakehouse is more technology driven. You can build Data Warehouses in different tools/ platforms. You already see an adoption of Data warehouse best practices in Data Lakehouse solutions, for instance star schemas. Sessions at DataBricks also talks about building a Data warehouse in a Data Lakehouse.

By the way, this blogpost is inspired by the YouTube videos of Stijn. If you want to know more of this, you can watch the video.

Let's build a Bronze layer

Ok let's go to Microsoft Fabric! I've created a new Lake House called : "LH_Bronze". I'm a bit experimenting with naming conventions;-). When you define a new Lake House it will create three items : a lake house, a standard dataset and a SQL endpoint.

Next is to choice a solution on how to move the data from the Landingzone to the Bronze Layer (I have a bit of mixed feelings about the a Landing zone and a Bronze Layer). Every copy of data is a kind of waste according to the Lean methodology.

For this reason I choose the Notebook way in Microsoft Fabric. Let's create a notebook in Microsoft Fabric.

And the notebook is created right away. I don't have to enter a name for the Notebook.

First let's add a Lake house tot the Lake house explorer.

And then you can choose to add a new lake house or use an existing lakehouse. I choose to use an Existing lakehouse.

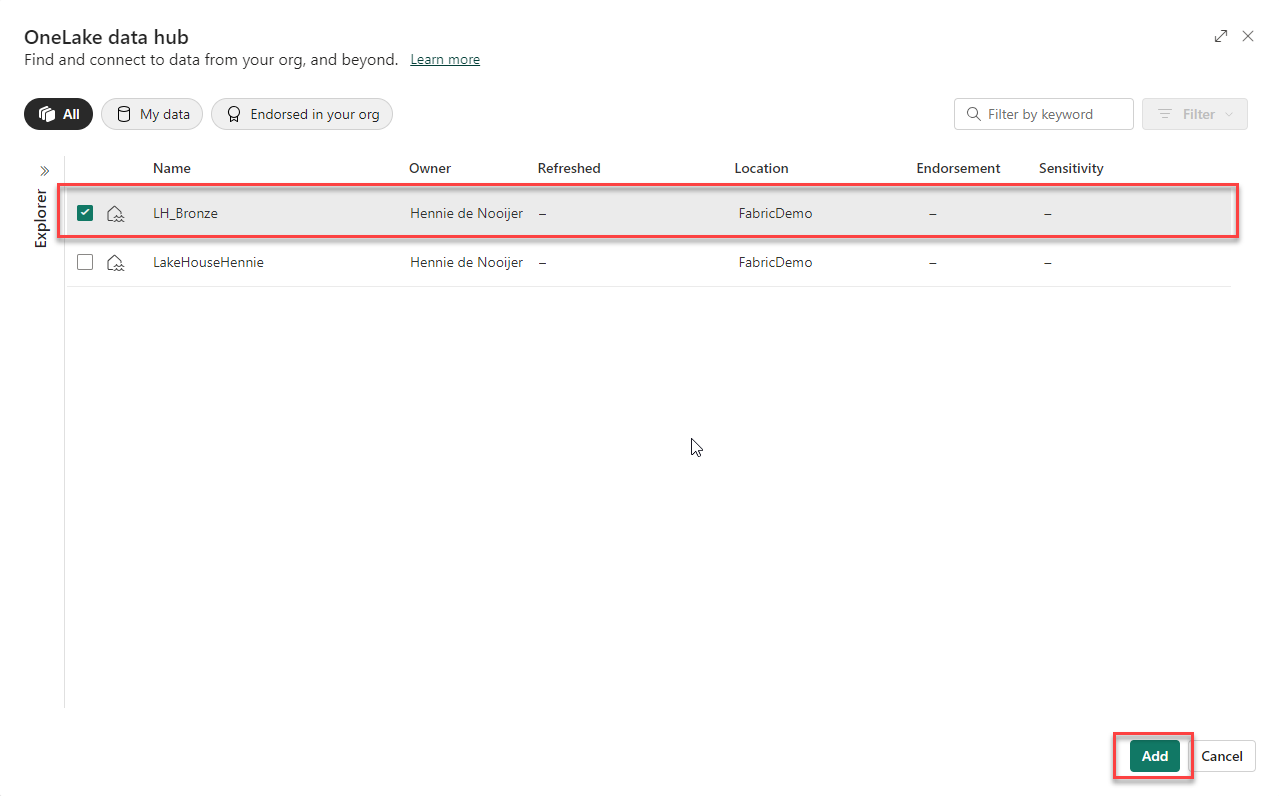

Now in the next window I can select a Lakehouse. I choose the "LH_Bronze" Lake House.

Next, in the workspace list of items you can see I've created a Notebook.

Create a shortcut to the Landingzone Lakehouse

First go to the LH_Bronze Lakehouse.

And click on the three dots (...) next to the Files and the context menu opens :

Choose New Shortcut in order to create a shortcut. Choose the internal Microsoft Fabric Onelake

Next, you have to select a Lake House or a Data Warehouse. I choose a Lakehouse. There are two options here : The Landingzone Lakehouse and the Bronze Lakehouse (you can create a shortcut to same Lakehouse? Interesting). Click on Next.

And then you have to further specify the location in the Lakehouse where you want to point the shortcut to.

And click on Create.

Now let's go back to the notebook.

Build a load script in Notebook

Now, we have created a shortcut and let's see how it looks now in the notebook. don't forget to refresh.

Now, the next step is to create some scripts let's create some script in order to read the Product into a data frame.

LandingZoneLocation = "Files/LandingZoneOneLake/SalesLT/Product"Product = spark.read.load(f'{LandingZoneLocation}', format='parquet')display(Product)

Next, We add some meta columns to the dataframe : a User that loaded the data, a LoadTimeStamp and a SHA hash.

from pyspark.sql.functions import *Productdf = (Productdf.withColumn('LoadUser', lit(f'{LoadUser}')).withColumn('LoadTimeStamp', current_timestamp()).withColumn('RowHash',sha2(concat_ws('.',col('ProductID'),col('Name'),col('ProductNumber'),col('Color'),col('StandardCost'),col('ListPrice'),col('Size'),col('Weight'),col('RowGuid'),col('ModifiedDate')), 256)))display(Productdf)

Next is a script pattern, that will make distinction between an initial load or incremental load. You can set this variable in the beginning of the script and may be it's possible to parameterize this, although there may be better solutions, in my opinion.

import deltaif IsInitial == 1:spark.sql(f'DROP TABLE IF EXISTS {DatabaseName}.{TableName};')Productdf.write.format('delta').saveAsTable(f'{DatabaseName}.{TableName}')if IsInitial == 0:ProductdfDelta = delta.DeltaTable.forPath(spark, f'{DeltaTableLocation}')ProductdfDelta.alias('delta').merge(Productdf.alias('df'), 'delta.ProductID = df.ProductID').whenNotMatchedInsertAll().whenMatchedUpdateAll(condition = 'delta.RowHash <> df.RowHash').execute()

Final thoughts

Yes, well an interesting YouTube video of Stijn helped me on the way on how to build this example of a script on how to load data into a Bronze layer in Microsoft Fabric.

Hennie

Geen opmerkingen:

Een reactie posten