Introduction

Hi Everyone! It has been a while since I posted a blogpost. Time to post something new. Today I would like to discuss dbt with Snowflake. What is it? And how do you set up dbt with Snowflake. For this blogpost I'll use the tutorial of dbt to follow the step by step guide.

As mentioned in the tutorial, the basic steps are :

- Set up a warehouse with sample data.

- Connect the warehouse to dbt Cloud.

- Add a Git repository to dbt Cloud (not in the blogpost).

- Execute a dbt transformation using dbt run.

- Schedule a job or transformation.

Let's go..

Set up a warehouse with sample data

First step is to create dbt in the cloud for the most common data platforms : Bigquery, Databricks or Snowflake. I choose Snowflake.

CREATE WAREHOUSE IF NOT EXISTS transforming;

CREATE DATABASE IF NOT EXISTS raw;

CREATE DATABASE IF NOT EXISTS analytics;

CREATE SCHEMA IF NOT EXISTS raw.jaffle_shop;

CREATE SCHEMA IF NOT EXISTS raw.stripe;

create table raw.jaffle_shop.customers

( id integer,

first_name varchar,

last_name varchar

);

copy into raw.jaffle_shop.customers (id, first_name, last_name)

from 's3://dbt-tutorial-public/jaffle_shop_customers.csv'

file_format = (

type = 'CSV'

field_delimiter = ','

skip_header = 1

);

create table raw.jaffle_shop.orders

( id integer,

user_id integer,

order_date date,

status varchar,

_etl_loaded_at timestamp default current_timestamp

);

copy into raw.jaffle_shop.orders (id, user_id, order_date, status)

from 's3://dbt-tutorial-public/jaffle_shop_orders.csv'

file_format = (

type = 'CSV'

field_delimiter = ','

skip_header = 1

);

create table raw.stripe.payment

( id integer,

orderid integer,

paymentmethod varchar,

status varchar,

amount integer,

created date,

_batched_at timestamp default current_timestamp

);

copy into raw.stripe.payment (id, orderid, paymentmethod, status, amount, created)

from 's3://dbt-tutorial-public/stripe_payments.csv'

file_format = (

type = 'CSV'

field_delimiter = ','

skip_header = 1

);

The tables are created and loaded from a s3 bucket. That is something that dbt has setup to help you loading some testdata in the tables.

Let's see if we have some data.

We are now finished with setting up Snowflake and the tutorial code and data.

Connect the warehouse to dbt Cloud

It seems that there are two ways to connect Snowflake with dbt : partner connect or setup the Snowflake connection manually. I choose option 1. The tutorial is written based on the classic interface, so let's see if we can find the partner connect in the new interface of Snowflake.

It's available under the option Admin.

Enter the following information in the screen and press on connect :

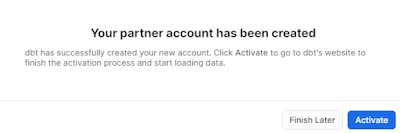

And now I've created and activated the free trail account:

Here is an overview of the options (I choose the developer plan) and confirmed the choice.

It seems there is a need for a small tweak in dbt. Change the name of the warehouse and database to analytics and transforming, respectively.

And now we can initialize the project and start the development. After pressing Start developing the following window appears.

Execute a dbt transformation using dbt run

Now let's build a model and a model is a select statement (!). Strange but ok. W'll see why dbt a select statement calls a model. Let's press on Create a new branch.And now we have to create a file in de models folder called models/customers.sql

With the following content :

And with dbt run we can check the model (query) :

Here is an example screen where I've created three models stg_customers, stg_orders and customers and customers references the stg_customers and stg_orders. stg_customers references the other models with a ref tag.

Now we can add a schema file to the project. It describes the structure of the tables and with some more constraints like 'unique'. I wonder whether dbt checks the uniqueness of the column. Run the test with dbt test.

The code (plus documentation):

version: 2

models:

- name: customers

description: One record per customer

columns:

- name: customer_id

description: Primary key

tests:

- unique

- not_null

- name: first_order_date

description: NULL when a customer has not yet placed an order.

- name: stg_customers

description: This model cleans up customer data

columns:

- name: customer_id

description: Primary key

tests:

- unique

- not_null

- name: stg_orders

description: This model cleans up order data

columns:

- name: order_id

description: Primary key

tests:

- unique

- not_null

- name: status

tests:

- accepted_values:

values: ['placed', 'shipped', 'completed', 'return_pending', 'returned']

Schedule a job or transformation

Next step is to run a job. First we need to commit the code and create a new environment.

Final thoughts

I've only scratched the surface of the tool dbt with this blogpost, but dbt shows a lot of maturity in the development, deployment and even testing the structures.

Hennie

Geen opmerkingen:

Een reactie posten