Introduction

Snapshots in DBT are directly connected with Slow Changing Dimensions TYPE 2 (SCD). A snapshot in DBT tracks the differences in the data over a period of time and creating a history for every row. The confusing part here is that in Dimensional modelling you have two kinds of facts : tranactional and snapshots. A snapshot in dimensional modelling is copy of the source data for every day, week or month or whatever you want. So, DBT calls SCD2 dimensions snapshots, it calls SQL queries models... confusing ;-). Lets dive into DBT snapshots.

This blogpost is a blogpost in a serie of blogposts :

- Build a data warehouse with DBT and Snowflake on AdventureWorksLT (PART I)

- Build Staging and Dimensions in DBT for Snowflake (PART II)

- Sources in DBT for Snowflake (PART III)

- Data freshness for DBT in snowflake (Part IV)

- Snapshots in DBT for Snowflake (Part V)

- Generic tests in DBT for Snowflake (PART VI)

- Singular tests in DBT for Snowflake (Part VII)

- Add packages to your DBT project (Part VIII)

- Adding documentation to your DBT project (Part IX)

- Working with hooks in DBT (Part X)

- Ephemeral tables in DBT (Part XI)

Creating snapshots with DBT

CREATE SCHEMA snapshots;Create a file in the snapshots folder in your DBT project. I created one for Customer.

And put in the content of the file the following code :

{% snapshot Customer_Snapshot %}

{{

config(

target_database ='ADVENTUREWORKS_DWH',

target_schema ='snapshots',

unique_key ='CUSTOMERID',

strategy ='timestamp',

updated_at ='MODIFIEDDATE',

)

}}

select * from {{source('ADVENTUREWORKSLT', 'CUSTOMER')}}

{% endsnapshot %}Explanation :

- Give the snapshot a proper name, eg Customer_Snapshot.

- Define a target database, if you have multiple databases.

- Define the unique key, I would say the Business Key.

- Then the strategy, there are two options here : Check or timestamp. For 'timestamp' you need a column which indicates the change that happened with a certain record. The 'Check' option is used when you don't have this column in the source. I would always the 'check' option because I've been in numerous cases where the modified date is not reliable in a source system.

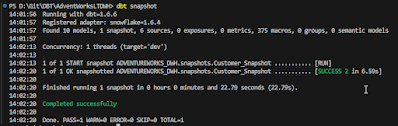

Compiling and building with the following command :

dbt snapshotAnd this results in :

Now, how does this looks like in Snowflake? The same table is created but with some extra columns at the end of the table : DBT_SCD_ID, DBT_UPDATED_AT, DBT_VALID_FROM and DBT_VALID_TO.

Now lets update a column in the source table and check whether this is reflected in the snapshot table.

UPDATE SalesLT.Customer

SET LastName = 'Smith',

MODIFIEDDATE = CAST('2023-12-23 06:00:15.581' AS DateTime)

WHERE CustomerID = 1;and run dbt snapshots again :

And now lets check the snapshot table in Snowflake again :

A new record is created in the snapshot table

You can create a view on top of that and that could be a kind of historical staging area (PSA, HSA).

WITH CTE_STG_CUSTOMER AS (

SELECT *

FROM {{ref('Customer_Snapshot')}}

)

SELECT

SUFFIX

,MIDDLENAME

,EMAILADDRESS

,COMPANYNAME

,NAMESTYLE

,PASSWORDHASH

,CUSTOMERID

,PHONE

,SALESPERSON

,TITLE

,FIRSTNAME

,LASTNAME

,MODIFIEDDATE

,ROWGUID

,PASSWORDSALT

,DBT_SCD_ID

,DBT_UPDATED_AT

,DBT_VALID_FROM

,DBT_VALID_TO

FROM CTE_STG_CUSTOMERAnd dbt run again :

dbt runCheck Snowflake again

Final thoughts

Interesting option here for DBT. It's very easy to add this SCD2 functionality to a table. Sometimes you want the SCD2 functionality right into the dimension too. You need to fgure that out. DBT_VALID_TO is null is a bit unhandy because you can use the between or else you need the use a IFNULL function.

Geen opmerkingen:

Een reactie posten